Today, most AI discussions still focus on models, capabilities, and recent progress. Far less attention is given to what makes those systems usable, resilient, and trustworthy once they leave the demo stage.

Recent large-scale outages across Europe have not only disrupted services, but exposed architectural assumptions about availability, centralization, and recovery that no longer hold as AI-driven systems depend on this infrastructure. Modern societies now depend on interconnected, software-driven infrastructure, where recovery time matters as much as functionality. This is not a critique of models, but of the systems they operate within.

As AI systems move into energy grids, mobility, industry, and cities, infrastructure and operations stop being a support function and become part of how decisions are constrained, enforced, and recovered. These systems must continue to operate under degraded conditions, partial failures, and delayed information, not just under ideal connectivity and full availability. Recovery is not just about restoring service availability or restarting components but about restoring acceptable system behavior under changed or degraded conditions in AI-driven systems. Modern systems assume components will fail, networks will partition, and data will arrive late or incomplete.

This change in how AI systems are built and operated is why this article focuses on three connected areas: the evolution from DevOps to MLOps and AIOps, the role of recovery as a design requirement, and the introduction of the computing continuum. Together, they describe how infrastructure moves from supporting AI systems to actively shaping their behavior.

From operating software to governing systems

This shift did not start with AI. It started with DevOps.

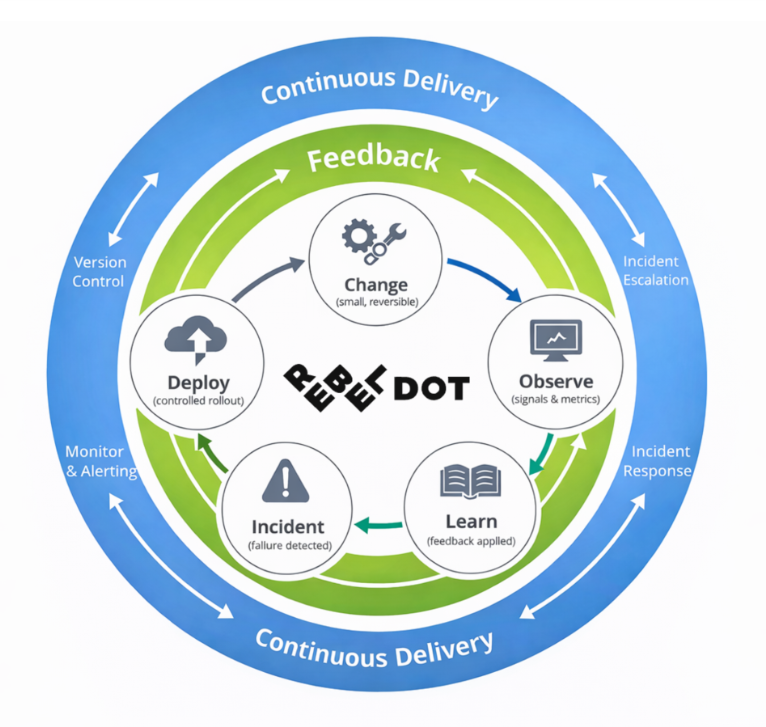

DevOps is a methodology that includes concrete practices and cross-functional teams, especially the integration of infrastructure engineers into product teams. Version control, automated testing, continuous delivery, infrastructure as code, monitoring, and incident response are combined into a single operational feedback loop as shown in Figure 2.

The main idea is making changes small, visible, reversible, and measurable. And when something fails, recover quickly and learn.

AI changes the nature of this loop. Systems are no longer driven only by deterministic code, but by data and models that evolve over time.

Model drift, data shifts, and behavior that changes with context introduce uncertainty that traditional operations were never designed to handle.

How operational responsibility shifts in AI-driven systems

Table 1. Operational responsibility as systems becomes autonomous.

These stages accumulate rather than replace each other. Each new layer of capability inherits the failure modes of the layers below it while introducing new ones of its own. Deployment, configuration, and infrastructure issues remain present in AI-driven systems, while new failure modes are added on top. As a result, failures propagate across layers rather than appearing in isolation. What changes over time is where responsibility sits. As systems become more adaptive and autonomous, infrastructure shifts from executing instructions to governing behavior.

Intelligence across the computing continuum

Here, intelligence refers to the logic that makes decisions in systems, including models, rules, and control mechanisms.

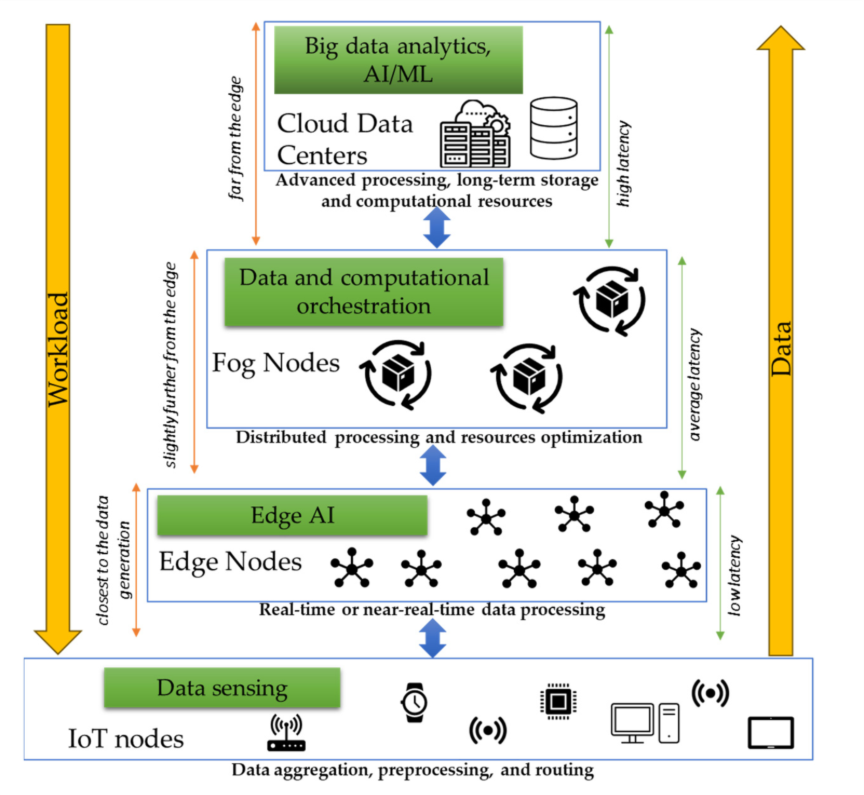

The computing continuum means that intelligence no longer runs in a single place. Instead, work is shared across the edge, the fog, and the cloud, depending on what is needed now.

The edge is where data is produced. This is where fast reactions and local decisions happen, especially when time matters or connectivity is limited. The fog sits between the edge and the cloud. It helps coordinate multiple edge systems, combine information, and keep things running when central services are not available. The cloud focuses on learning, improving models, and handling long-term or large-scale decisions.

As AI systems are used in important areas and real-world conditions are unpredictable, relying only on centralized platforms is no longer enough. Systems must continue to operate and recover even when parts of the infrastructure fail.

Because responsibility, recovery, and autonomy boundaries must be enforced even under failure, architectures naturally move toward a computing continuum as shown in Figure 3. This is not about pushing intelligence to the edge but about placing decisions where failure and recovery can be controlled. The main challenge is no longer just deploying software, but deciding where intelligence runs, how it moves between layers, and how the system restores stability when things go wrong.

What defines success in 2026

Success in 2026 will not be measured by the sophistication of individual models, but by how AI-driven systems behave under stress, uncertainty, and failure.

Infrastructure and operations become the mechanisms through which responsibility is enforced. Trust in AI systems is not a property of models alone, but of the architectures and recovery mechanisms that constrain them. These mechanisms define how systems degrade, how autonomy is bounded, and when human intervention is required.

Engineers should not deploy AI systems whose failure modes cannot be understood, constrained, and recovered from. The most important work happens beneath the models. It is this work that determines which AI systems can be trusted at scale.

This article presents these changes at a trend level. In future articles, each of these areas will be explored in more technical depth:

- “how operational responsibility evolves from DevOps to MLOps and AIOps”,

- “why recovery must be treated as a design requirement in AI systems”, and

- “how the computing continuum shapes autonomy, failure, and control boundaries”.

They show what is required for AI systems to be trusted in production environments.

References and inspiration

- Google SRE Book - Postmortem Culture: Learning from Failure

- Humble, J., Farley, D. - Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation

- Arcas, G. I., Cioara, T. , Data Orchestration Platform for AI Workflows Execution Across Computing Continuum (CLOSER 2025)